
machine vision

camera selection

understanding machine vision

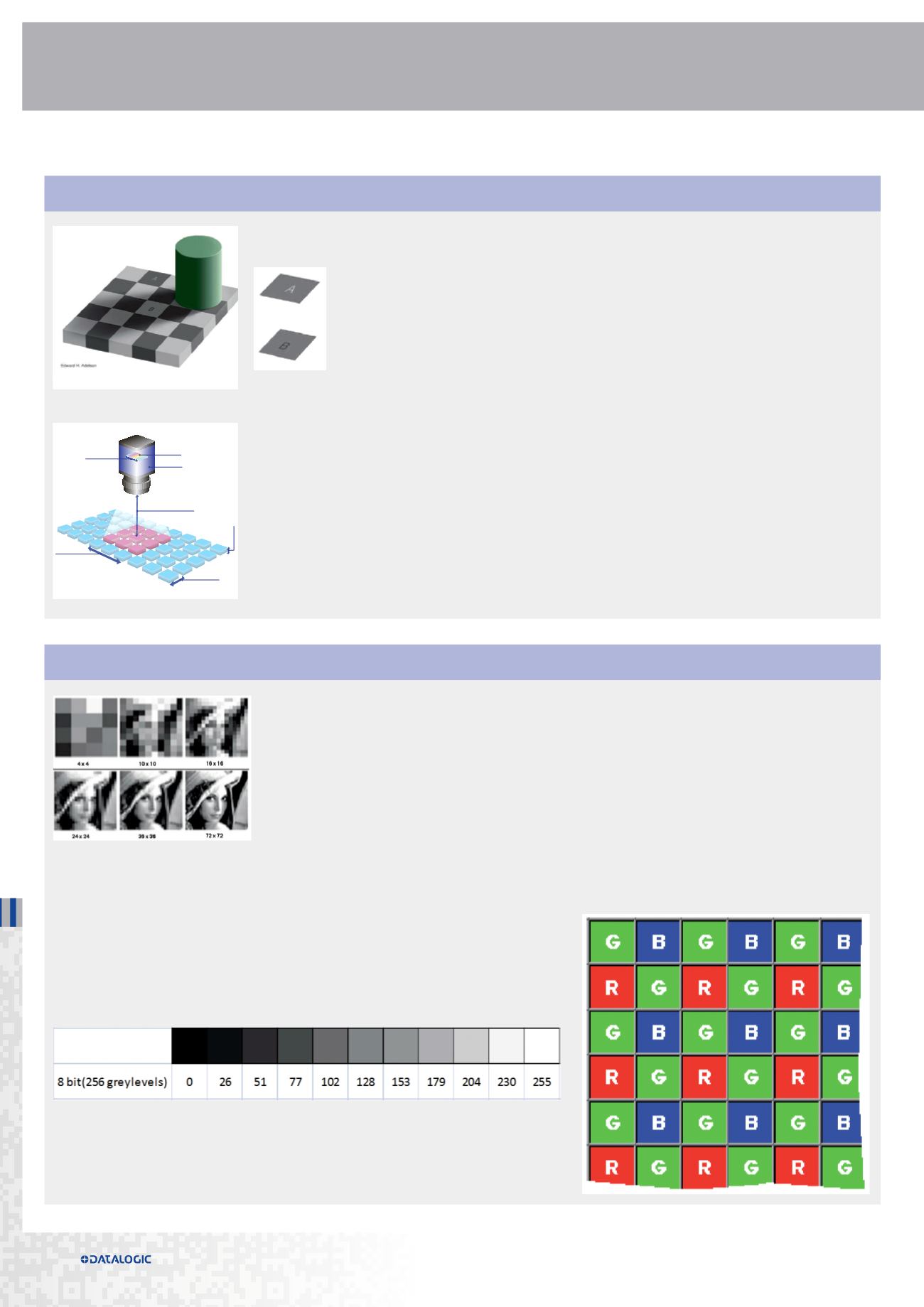

Machine vision differs from human vision. The human brain infers what the eyes cannot see, and it can build composite images from multiple viewing angles.

Squares A and B appear to have different shades (A looks darker than B), but they do not. With the surroundings removed, both squares have exactly the same grey level, and this is how an electronic eye perceives them.

A monochrome (greyscale) machine vision image shows only differences in contrast, so a good image for machine vision differs from a good image for human vision.

Machine vision glossary

Working Distance (WD):

The distance from the front of the lens to the object when in sharp focus.

Field-of-View (FOV):

The imaging area that is projected onto the imager by the lens. Note that most imagers used today provide a 4:3 aspect ratio (4 units wide by 3 units high).

Depth-of-Field (DOF):

The range of lens-to-object distances over which the image remains in sharp focus. Note that the shorter a lens's focal length, or the more closed its aperture, the greater the available depth of field.

Resolution:

The ability of an optical system to distinguish two features that are close together. Note that both imagers and lenses have their own resolutions. Always weigh the benefits of higher camera resolution; lens resolution is nearly always better than most factory applications need.
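The depth-of-field note in the glossary can be illustrated with a small calculation. The sketch below is not taken from the original text: it uses the common close-range approximation DOF ≈ 2·N·c·u²/f², where N is the f-number, c an assumed circle of confusion, u the lens-to-object distance and f the focal length. The function name and all numeric values are illustrative assumptions.

```python
def approx_depth_of_field_mm(focal_length_mm, f_number, distance_mm, coc_mm=0.01):
    """Approximate total depth of field in millimetres.

    Uses DOF ~ 2 * N * c * u^2 / f^2, which is only reasonable when the
    object distance u is much larger than the focal length and well below
    the hyperfocal distance. coc_mm (circle of confusion) is an assumed value.
    """
    return 2.0 * f_number * coc_mm * distance_mm ** 2 / focal_length_mm ** 2


# Illustrative lens settings (assumptions, not catalog data).
baseline = approx_depth_of_field_mm(focal_length_mm=25, f_number=4, distance_mm=300)
shorter_lens = approx_depth_of_field_mm(focal_length_mm=12, f_number=4, distance_mm=300)
closed_aperture = approx_depth_of_field_mm(focal_length_mm=25, f_number=8, distance_mm=300)

print(f"25 mm lens at f/4: {baseline:.2f} mm")
print(f"12 mm lens at f/4: {shorter_lens:.2f} mm")
print(f"25 mm lens at f/8: {closed_aperture:.2f} mm")
```

Under these assumptions, both the shorter focal length and the more closed aperture (higher f-number) give a larger depth of field than the baseline, in line with the glossary note.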

Resolution

Resolution measures a camera's ability to capture image detail: higher resolution means more image detail. By convention, pixel resolution is given as two positive integers, the number of pixel columns (width) followed by the number of pixel rows (height), for example 640 by 480. Another popular convention cites the total number of pixels in the image, typically in megapixels, calculated by multiplying pixel columns by pixel rows.
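As a quick illustration of the megapixel convention, the following minimal sketch multiplies columns by rows for the 640 by 480 example above. The function name is hypothetical.

```python
def megapixels(columns, rows):
    """Total pixel count expressed in megapixels (millions of pixels)."""
    return columns * rows / 1_000_000


# The 640 by 480 example: 307,200 pixels in total.
vga_mp = megapixels(640, 480)
print(f"640 x 480 = {640 * 480} pixels = {vga_mp:.2f} MP")
```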

Acquisition (frame) rate

Frame rate is the frequency at which a camera can acquire consecutive images (area scan camera) or consecutive lines (line scan camera).

It is typically expressed in frames per second (FPS) for area scan cameras and in thousands of lines per second (kHz) for line scan cameras.
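An acquisition rate can also be read as the time available between two consecutive frames or lines. The sketch below shows that conversion; the function name and the example rates (60 FPS, 20 kHz) are illustrative assumptions, not values from a specific camera.

```python
def acquisition_period_ms(rate_hz):
    """Time between consecutive acquisitions, in milliseconds."""
    if rate_hz <= 0:
        raise ValueError("rate must be positive")
    return 1000.0 / rate_hz


# Area scan camera: rate given in frames per second.
frame_period = acquisition_period_ms(60)
# Line scan camera: 20 kHz means 20,000 lines per second.
line_period = acquisition_period_ms(20_000)

print(f"60 FPS -> {frame_period:.2f} ms per frame")
print(f"20 kHz -> {line_period:.3f} ms per line")
```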

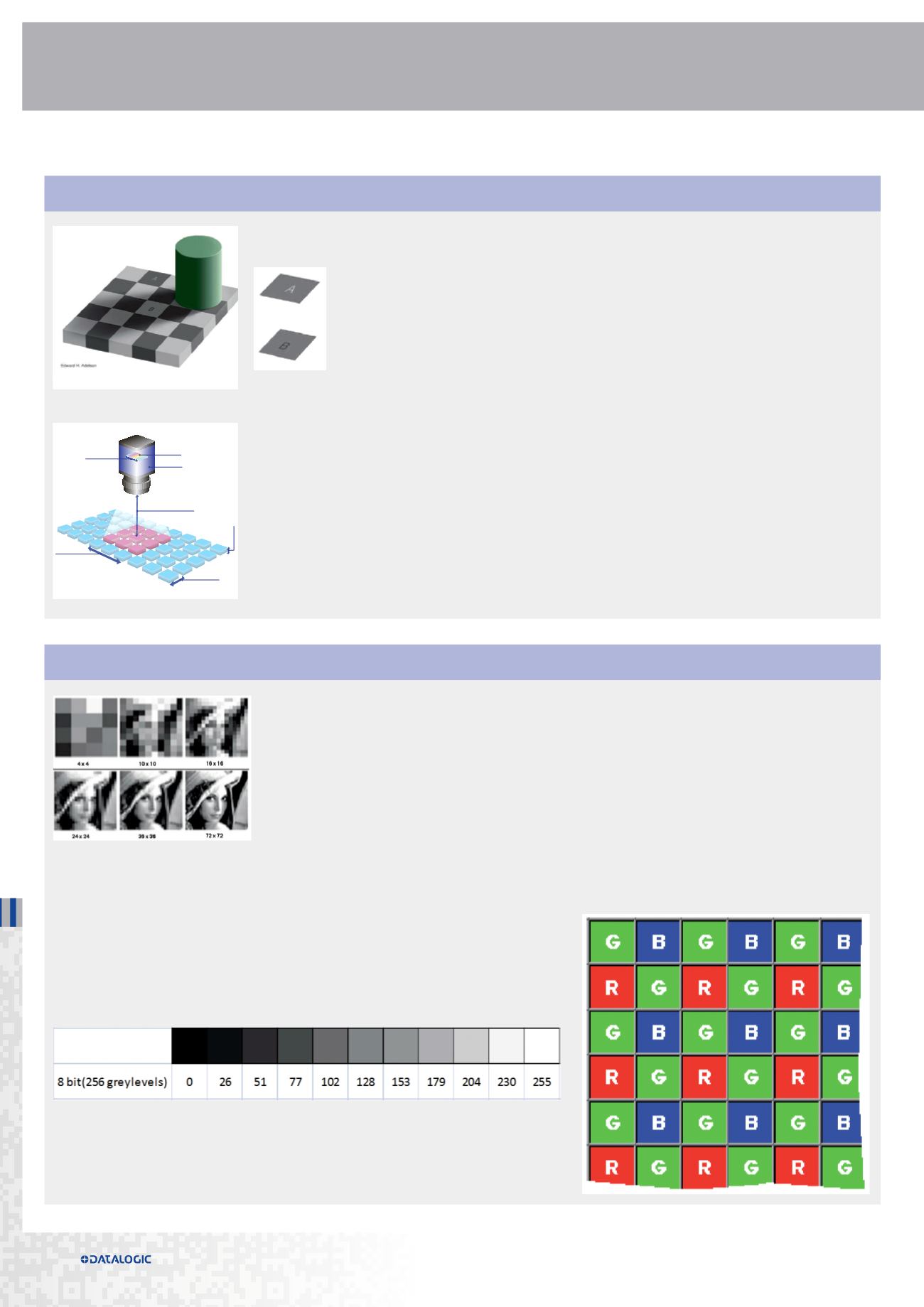

Greyscale vs. color

Most machine vision applications are solved using greyscale cameras. In a greyscale image, the value of each pixel represents light intensity. The color depth is the number of different intensities (i.e. shades of grey) that each image pixel can distinguish. It is typically expressed in bits or grey levels (e.g. 8 bits = 256 different shades of grey).
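The relation between bit depth and grey levels is a power of two, as the minimal sketch below shows. The function name is hypothetical, and the 10-bit and 12-bit depths are added only as further examples.

```python
def grey_levels(bit_depth):
    """Number of distinct intensities a pixel can encode at a given bit depth."""
    return 2 ** bit_depth


for bits in (8, 10, 12):
    print(f"{bits} bits = {grey_levels(bits)} grey levels")
```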

Color images, by contrast, typically contain 24 bits of information per pixel (8 bits for each of the red, green and blue channels, as opposed to a greyscale image's 8 bits), so they carry three times as much data per pixel. Note that most color cameras actually use a greyscale imager with a Bayer filter: the intensities passing through each 2x2 grid of color-filtered pixels are interpreted and converted into a color image. Each grid has twice as many green pixels as red or blue ones, since the human eye is most sensitive to green.
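The 2x2 Bayer layout can be sketched by tiling a small pattern over the imager and counting the filters of each color. The RGGB ordering below is an assumption (other orderings such as BGGR or GRBG are also used), and the helper names are hypothetical.

```python
from collections import Counter

# One 2x2 Bayer tile, assuming an RGGB ordering.
BAYER_TILE = (("R", "G"),
              ("G", "B"))


def bayer_color(row, col):
    """Color of the filter covering the pixel at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def count_filters(rows, cols):
    """Count how many pixels sit behind each filter color."""
    return Counter(bayer_color(r, c) for r in range(rows) for c in range(cols))


# A 640 by 480 imager: 640 columns, 480 rows.
counts = count_filters(rows=480, cols=640)
print(dict(counts))
```

For any imager with an even number of rows and columns, the green count comes out at twice the red or blue count, which matches the description above.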

Sensor size

[Figure labels: camera, sensor, field of view, resolution, depth of field, working distance]
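The quantities named here (sensor size, field of view, working distance, resolution) can be related with a simple thin-lens approximation. The sketch below is an assumption-laden illustration, not a method stated in the original: it takes FOV ≈ sensor size × working distance / focal length, which is only reasonable when the working distance is much larger than the focal length. Function names and numeric values are hypothetical.

```python
def field_of_view_mm(sensor_size_mm, working_distance_mm, focal_length_mm):
    """Approximate field of view along one axis (thin-lens approximation)."""
    return sensor_size_mm * working_distance_mm / focal_length_mm


def object_pixel_size_mm(fov_mm, pixels):
    """Size on the object covered by one pixel along the same axis."""
    return fov_mm / pixels


# Illustrative setup: 6.4 mm wide sensor, 640 pixel columns,
# 25 mm lens, 300 mm working distance (all assumed values).
fov_width = field_of_view_mm(sensor_size_mm=6.4, working_distance_mm=300, focal_length_mm=25)
pixel_size = object_pixel_size_mm(fov_width, pixels=640)

print(f"Horizontal FOV: {fov_width:.1f} mm")
print(f"One pixel covers about {pixel_size:.3f} mm on the object")
```

With the same lens and working distance, a larger sensor gives a proportionally larger field of view in this approximation.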